Mapping Buildings from the Sky with a U-Net Neural Network

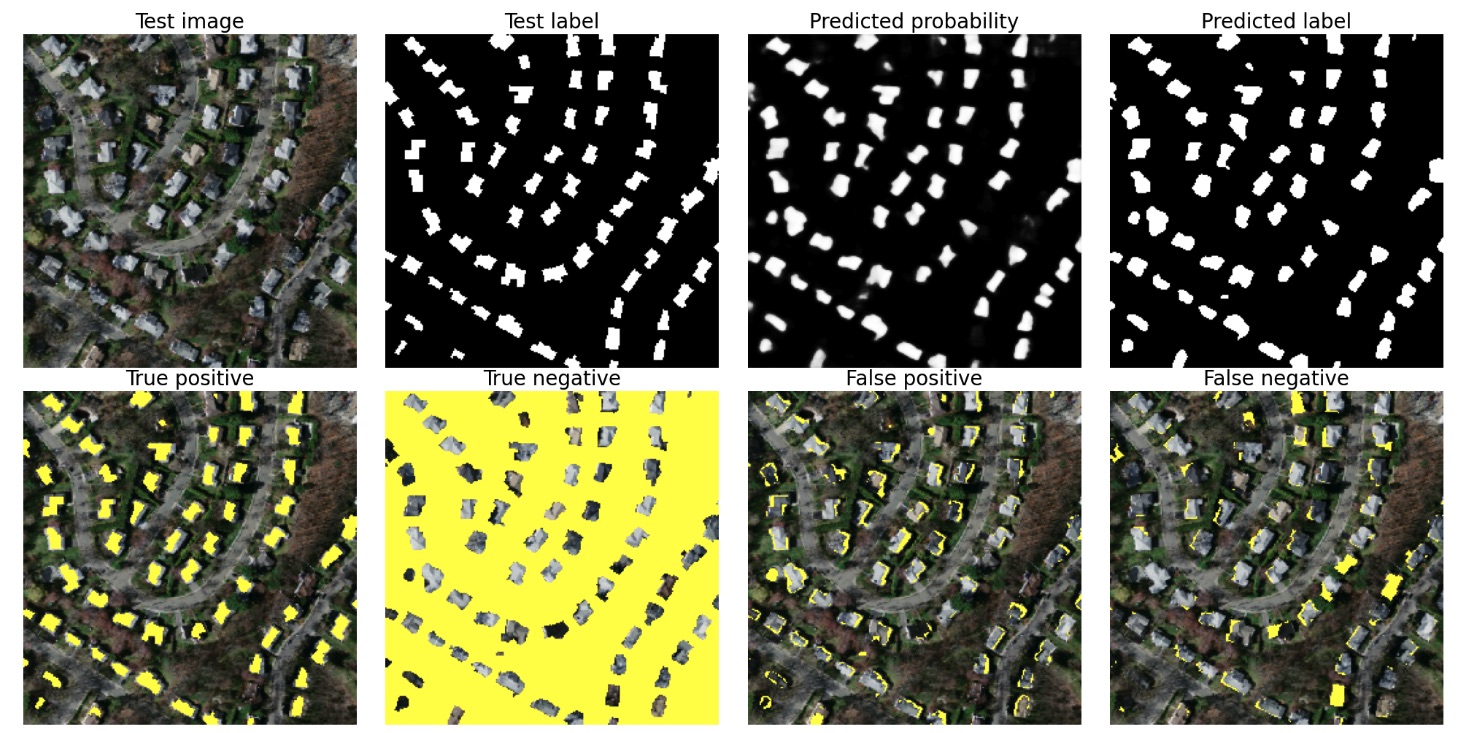

This project is all about teaching a neural network to spot buildings from aerial images, and it was honestly one of the most exciting challenges I’ve tackled! Using a U-Net architecture (a powerful model designed for image segmentation), I trained the network to differentiate between buildings and everything else—like trees, roads, and backgrounds.
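The post doesn't list the network's exact layer configuration, so here is a hypothetical sketch of a generic U-Net. It does not build the model. It only traces tensor shapes through one, assuming 256x256 RGB tiles, four down-sampling steps, 64 base filters, and 'same'-padded convolutions. The point is to show the U shape: the encoder halves resolution while doubling channels, the decoder mirrors it, and each decoder level concatenates the matching encoder output (the skip connection). The function name `unet_shapes` and all of its defaults are illustrative assumptions.

```python
def unet_shapes(size=256, in_channels=3, base_filters=64, depth=4):
    """Trace (height, width, channels) through a hypothetical U-Net.

    Assumes 'same'-padded convolutions, so only pooling and upsampling
    change the spatial size.
    """
    if size % (2 ** depth):
        raise ValueError("size must be divisible by 2**depth")
    trace = [("input", (size, size, in_channels))]
    skips = []
    h, filters = size, base_filters
    # Encoder: a conv block keeps H and W; 2x2 max-pooling then halves them.
    for level in range(depth):
        trace.append((f"enc{level} conv", (h, h, filters)))
        skips.append((h, filters))  # saved for the matching decoder level
        h //= 2
        filters *= 2
    # Bottleneck: the lowest-resolution, highest-channel representation.
    trace.append(("bottleneck", (h, h, filters)))
    # Decoder: upsampling doubles H and W and halves the channels, then the
    # skip connection is concatenated along the channel axis.
    for level in reversed(range(depth)):
        skip_h, skip_filters = skips[level]
        h *= 2
        filters //= 2
        trace.append((f"dec{level} concat", (h, h, filters + skip_filters)))
        trace.append((f"dec{level} conv", (h, h, filters)))
    # A 1x1 conv with a sigmoid gives one building probability per pixel.
    trace.append(("output", (h, h, 1)))
    return trace


for name, shape in unet_shapes():
    print(f"{name:>14}: {shape}")
```

The skip connections are what make U-Net suit footprint mapping: the decoder recovers sharp building edges from high-resolution encoder features that would otherwise be lost in the bottleneck.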

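By combining spatial dropout, a custom loss function (Binary Cross-Entropy + Dice loss), and a bit of patience, I reached 94.7% accuracy and a 76.7% Dice score. The Dice score is the more telling number. Background pixels vastly outnumber building pixels, so a model could score high accuracy just by predicting "background" almost everywhere, whereas Dice only rewards overlap between the predicted and true building masks. Together, the two numbers show that the model does a solid job of identifying and mapping building footprints despite that imbalance.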
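The training code isn't shown here, so this is a minimal pure-Python sketch of the ideas named above, not the author's implementation. Flat lists stand in for image tensors. The `smooth` constant, the 0.5 threshold, the 1:1 weighting of BCE and Dice, and every function name are assumptions. In a real Keras project, spatial dropout would come from the `SpatialDropout2D` layer rather than a hand-written function. The demo at the bottom shows why accuracy alone misleads on imbalanced masks.

```python
import math
import random

EPS = 1e-7  # keeps log() away from 0 in the cross-entropy


def bce_loss(y_true, y_prob):
    """Mean binary cross-entropy over all pixels."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, EPS), 1 - EPS)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


def dice_coefficient(y_true, y_prob, smooth=1e-6):
    """Soft Dice: 2 * overlap / (|true| + |predicted|)."""
    intersection = sum(t * p for t, p in zip(y_true, y_prob))
    return (2 * intersection + smooth) / (sum(y_true) + sum(y_prob) + smooth)


def bce_dice_loss(y_true, y_prob):
    """BCE handles per-pixel correctness; 1 - Dice rewards mask overlap."""
    return bce_loss(y_true, y_prob) + (1 - dice_coefficient(y_true, y_prob))


def pixel_accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of pixels whose thresholded prediction matches the label."""
    hits = sum((p >= threshold) == bool(t) for t, p in zip(y_true, y_prob))
    return hits / len(y_true)


def spatial_dropout(feature_maps, rate, rng):
    """Drop whole channels (not single pixels) with probability `rate`.

    Survivors are scaled by 1 / (1 - rate) (inverted dropout), so the
    expected activation is unchanged. feature_maps: list of 2D channels.
    """
    keep_scale = 1.0 / (1.0 - rate)
    out = []
    for channel in feature_maps:
        if rng.random() < rate:
            out.append([[0.0] * len(row) for row in channel])
        else:
            out.append([[v * keep_scale for v in row] for row in channel])
    return out


# Imbalance demo: 5% building pixels, and a "model" that never predicts one.
y_true = [1] * 5 + [0] * 95
all_background = [0.0] * 100
hard_mask = [float(p >= 0.5) for p in all_background]
print(pixel_accuracy(y_true, all_background))  # 0.95
print(round(dice_coefficient(y_true, hard_mask), 3))  # 0.0
```

Dropping entire channels rather than single pixels matters for convolutional features: neighbouring pixels in a feature map are strongly correlated, so ordinary pixel-wise dropout barely regularizes them.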

For a more detailed look at the project, check out my Medium article! 🚀

Check out my GitHub repo for the full code and feel free to explore how I put it all together. If you're curious about aerial image segmentation, this is a great place to start!

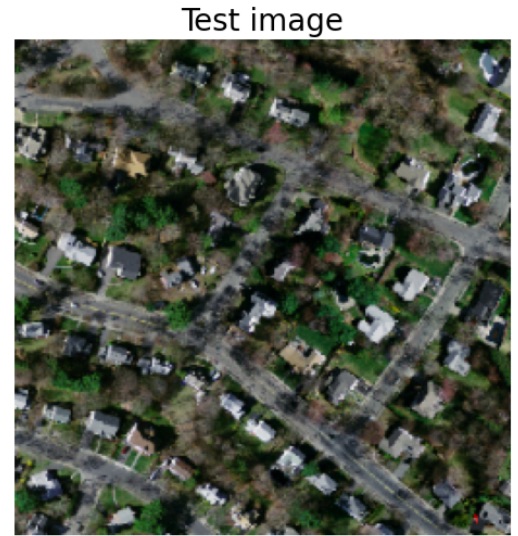

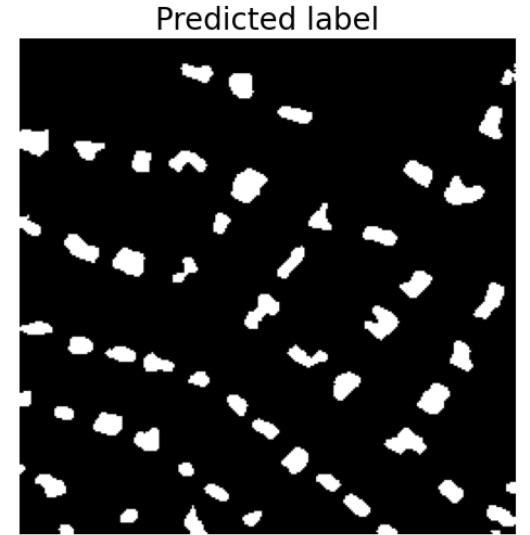

Use the slider below to compare an original aerial image with the model's predicted building mask.